Veritas Cluster Volume Manager (CVM) is one of the best solutions for concurrent writes. It allows up to 128 nodes in a cluster to simultaneously access and manage a set of disks under VxVM control.

Alternatively, you can consider GPFS (IBM Spectrum Scale), which supports more than 1000 nodes in a cluster.

In this article, we'll show you how to create a shared Disk Group (DG), Volume and FileSystem (VxFS) in a two-node (Active-Active) Veritas Cluster.

Prerequisites

- The RDM disks must be presented from storage to all nodes in shared mode. If the nodes are VMs, the disks must also be added in shared mode at the VMware level.

- The shared Disk Group (DG) must be created from the master node.

Step-1: Scanning LUNs

Once the storage team has mapped the new LUN to the hosts, obtain the LUN ID and keep it handy; you will need it later.

Run the following for loop on both nodes (Node-1 & Node-2) to detect the newly allocated LUNs at the OS level.

for disk_scan in $(ls /sys/class/scsi_host); do echo "- - -" > /sys/class/scsi_host/$disk_scan/scan; echo "Scanning $disk_scan...Completed"; done

Scanning host0...Completed
Scanning host1...Completed
...
Scanning host[N]...Completed
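The same rescan can be wrapped in a small function. This is a minimal sketch that assumes root privileges on a Linux host. It globs the host*/scan files instead of parsing `ls` output. The function name `rescan_scsi_hosts` and its optional directory argument are hypothetical; the argument exists only so the function can be exercised against a test directory.

```sh
#!/bin/sh
# Sketch: ask every SCSI host adapter to rescan for newly mapped LUNs.
# Optional $1 (hypothetical, for testing) overrides the sysfs directory.
rescan_scsi_hosts() {
    local root="${1:-/sys/class/scsi_host}"
    local scan_file
    for scan_file in "$root"/host*/scan; do
        # If the glob matched nothing, the literal pattern remains; skip it
        [ -e "$scan_file" ] || continue
        # "- - -" is a wildcard for channel, target and LUN
        echo "- - -" > "$scan_file"
        echo "Scanning $(basename "$(dirname "$scan_file")")...Completed"
    done
}
```

Run `rescan_scsi_hosts` as root on both nodes, just like the loop above.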

Once the scan has completed, run the following command to check whether the given LUN has been discovered on the system.

lsscsi --scsi --size | grep -i [Last_Five_Digit_of_LUN]
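To map the LUN ID straight to its OS device name, the grep can be followed by a field filter. This is a sketch that assumes the device node column in the lsscsi output starts with /dev/. The function name `find_lun_device` and the sample line in the test are illustrative, not real output from this setup.

```sh
#!/bin/sh
# Sketch: from lsscsi output on stdin, print the /dev/sdX path of every
# line containing the given LUN ID fragment (case-insensitive match).
find_lun_device() {
    local lun_fragment="$1"
    grep -i -- "$lun_fragment" | awk '{
        for (i = 1; i <= NF; i++)
            if ($i ~ /^\/dev\//) print $i
    }'
}
```

Usage: `lsscsi --scsi --size | find_lun_device [Last_Five_Digit_of_LUN]`.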

Step-2: Identifying Disks using vxdisk

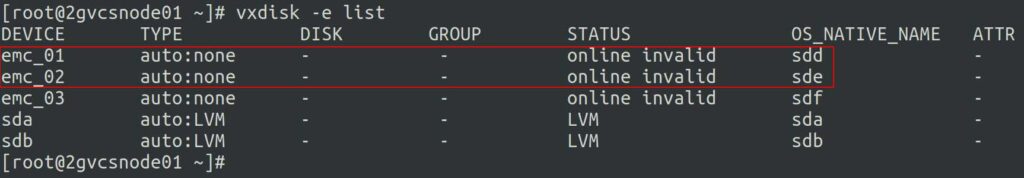

By default, all available disks on the system are visible to Veritas Volume Manager (VxVM) and can be listed using the 'vxdisk' command, as shown below.

vxdisk -e list

By default, unused disks show STATUS as "online invalid" and TYPE as "auto:none", which indicates that they are not yet under Veritas control. Before proceeding, double-check each disk against the LUN ID that you are going to bring under VxVM control.
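Candidate disks can be picked out of the `vxdisk -e list` output automatically. This sketch assumes the column layout shown in this article: DEVICE, TYPE, DISK, GROUP, a STATUS of one or two words, OS_NATIVE_NAME, then ATTR. The function name `list_unmanaged_disks` is hypothetical.

```sh
#!/bin/sh
# Sketch: read `vxdisk -e list` output on stdin and print DEVICE and
# OS_NATIVE_NAME for disks VxVM sees but does not manage yet
# (TYPE auto:none, STATUS "online invalid", which spans fields 5 and 6).
list_unmanaged_disks() {
    awk '$2 == "auto:none" && $5 == "online" && $6 == "invalid" { print $1, $7 }'
}
```

Usage: `vxdisk -e list | list_unmanaged_disks`. Always confirm the result against the LUN ID before initializing anything.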

If the newly added disks do not appear in the above output, run the following command to scan the disk devices in the operating system device tree.

vxdisk scandisks

Step-3: Initializing Disks using vxdisksetup

Once you have identified the disks that you want to bring under the control of Veritas Volume Manager (VxVM), use the 'vxdisksetup' command to configure them for use by VxVM. Let's bring the 'sdd' and 'sde' disks under VxVM control.

vxdisksetup -i sdd

vxdisksetup -i sde
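When several disks need initializing, the same command can be looped. This is a minimal sketch that stops at the first failure. `init_vxvm_disks` and the `VXDISKSETUP` override are hypothetical; the override exists only so the loop can be dry-run with `echo`.

```sh
#!/bin/sh
# Sketch: run "vxdisksetup -i <disk>" for each disk given, stopping at the
# first failure. VXDISKSETUP (hypothetical) may be set to "echo" for a dry run.
init_vxvm_disks() {
    local dev
    for dev in "$@"; do
        "${VXDISKSETUP:-vxdisksetup}" -i "$dev" || return 1
    done
}
```

Usage: `init_vxvm_disks sdd sde`, or `VXDISKSETUP=echo init_vxvm_disks sdd sde` to preview the commands first.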

Once the shared disks are under Veritas Volume Manager (VxVM) control, their STATUS changes to "online" and TYPE to "auto:cdsdisk", as shown below:

vxdisk -e list

DEVICE   TYPE          DISK    GROUP    STATUS           OS_NATIVE_NAME   ATTR
emc_01   auto:cdsdisk  -       -        online           sdd              -
emc_02   auto:cdsdisk  -       -        online           sde              -
emc_03   auto:none     -       -        online invalid   sdf              -
sda      auto:LVM      -       -        LVM              sda              -
sdb      auto:LVM      -       -        LVM              sdb              -

To view detailed information about a device, run:

vxdisk list emc_01

Step-3a: Log in to the other node ("Node-2") and check the STATUS. If it still shows "online invalid", rescan the disks once.

vxdisk scandisks
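To make the Node-2 check scriptable, the STATUS column can be extracted per device. STATUS can be one or two words ("online", "online invalid", "online shared"), so this sketch takes fields 5 through NF-2. It assumes OS_NATIVE_NAME and ATTR are always single tokens. The function name `disk_status` is hypothetical.

```sh
#!/bin/sh
# Sketch: print the STATUS column for one device from `vxdisk -e list`
# output on stdin. STATUS = fields 5..NF-2 (may be one or two words).
disk_status() {
    awk -v dev="$1" '$1 == dev {
        s = $5
        for (i = 6; i <= NF - 2; i++) s = s " " $i
        print s
    }'
}
```

On Node-2, for example: `[ "$(vxdisk -e list | disk_status emc_01)" = "online invalid" ] && vxdisk scandisks`.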

Step-4: Identifying the Master Node

As mentioned in the Prerequisites, the shared Disk Group (DG) must be created from the Master Node. To determine if a node is a master or slave, run the following command:

vxdctl -c mode

mode: enabled: cluster active - MASTER
master: 2gvcsnode01
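A script can use this output to refuse to run DG-creation steps anywhere but the master. This sketch parses the two lines shown above. The slave-side wording ("cluster active - SLAVE") used in the test is an assumption. The function names `is_cvm_master` and `cvm_master_name` are hypothetical.

```sh
#!/bin/sh
# Sketch: parse `vxdctl -c mode` output on stdin.
# is_cvm_master succeeds only if this node reports itself as MASTER;
# cvm_master_name prints the hostname from the "master:" line.
is_cvm_master() {
    grep -q 'cluster active - MASTER'
}

cvm_master_name() {
    awk '$1 == "master:" { print $2 }'
}
```

For example: `vxdctl -c mode | is_cvm_master || { echo "Not the CVM master"; exit 1; }`.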

Step-5: Creating Shared Disk Group and adding disks

As per the above output, Node-1 is the master node, so I'll run all of the following commands on that node only.

Disk Groups are similar to Volume Groups in LVM. You can use the vxdg command with the required parameters to create a new disk group; the -s option makes it a shared disk group. A disk group must contain at least one disk at the time it is created. Let's create a shared disk group named "testdg" and add the identified disks 'emc_01' and 'emc_02' to it.

Syntax:

vxdg -s init [DG_Name] [Disk_Name_as_per_Your_Wish=Disk_Device] ...

vxdg -s init testdg disk1=emc_01 disk2=emc_02
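When more disks are involved, the diskN=device pairs can be generated. This is a minimal POSIX-sh sketch that names members disk1, disk2, and so on, in argument order. It must still be run on the CVM master node. `create_shared_dg` and the `VXDG` dry-run override are hypothetical.

```sh
#!/bin/sh
# Sketch: create a shared (CVM) disk group, naming members disk1..diskN.
# VXDG (hypothetical) may be set to "echo" to preview the command.
create_shared_dg() {
    local dg count n
    dg="$1"
    shift
    count=$#
    n=1
    # Rewrite each device argument into diskN=device, in place
    while [ "$n" -le "$count" ]; do
        set -- "$@" "disk${n}=$1"
        shift
        n=$((n + 1))
    done
    "${VXDG:-vxdg}" -s init "$dg" "$@"
}
```

Usage: `create_shared_dg testdg emc_01 emc_02` runs the same command as above.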

To display information on existing disk groups, run:

vxdg list

NAME     STATE                ID
testdg   enabled,shared,cds   1245342109.13.2gvcsnode01
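Before creating volumes, it can help to confirm that the DG really came up shared. This sketch checks for "shared" among the comma-separated STATE flags, assuming the NAME/STATE/ID layout shown above. The function name `is_shared_dg` is hypothetical.

```sh
#!/bin/sh
# Sketch: succeed if the named disk group appears in `vxdg list` output
# (stdin) with "shared" among its comma-separated STATE flags.
is_shared_dg() {
    awk -v dg="$1" '$1 == dg && ("," $2 ",") ~ /,shared,/ { found = 1 }
        END { exit !found }'
}
```

Usage: `vxdg list | is_shared_dg testdg && echo "testdg is shared"`.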

The DISK and GROUP columns are now populated, and STATUS shows "online shared", as shown below:

vxdisk -e list

DEVICE   TYPE          DISK    GROUP    STATUS           OS_NATIVE_NAME   ATTR
emc_01   auto:cdsdisk  disk1   testdg   online shared    sdd              -
emc_02   auto:cdsdisk  disk2   testdg   online shared    sde              -
emc_03   auto:none     -       -        online invalid   sdf              -
sda      auto:LVM      -       -        LVM              sda              -
sdb      auto:LVM      -       -        LVM              sdb              -

To display more detailed information on a specific disk group, use the following command:

vxdg list testdg

Step-5a: Log in to the other node ("Node-2") and check the DISK and GROUP fields. If they have not been updated, rescan the disks once.

vxdisk scandisks
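On Node-2, the check can be narrowed to the disks that belong to the new DG. This sketch prints DEVICE and DISK for rows whose GROUP column matches, assuming the `vxdisk -e list` column order shown above. The function name `dg_members` is hypothetical.

```sh
#!/bin/sh
# Sketch: from `vxdisk -e list` output on stdin, print DEVICE and DISK
# name for every row whose GROUP column (field 4) matches the disk group.
dg_members() {
    awk -v dg="$1" '$4 == dg { print $1, $3 }'
}
```

Usage on Node-2: `vxdisk -e list | dg_members testdg`. Empty output suggests another `vxdisk scandisks` is needed.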

Step-6: Creating Veritas Volume

To check the free space in the DG, use the vxassist 'maxsize' argument, which reports the maximum volume size you can create in the named disk group ("testdg"):

vxassist -g testdg maxsize

Maximum volume size: 4294899712 (2097119Mb)
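Judging by the two numbers, the first figure appears to be in 512-byte sectors: 4294899712 / 2048 = 2097119 MB, or roughly 2 TB. A 1024g (1,048,576 MB) volume therefore fits. This sketch extracts the MB figure from the output format shown above and compares it with a requested size in GB. The function names `maxsize_mb` and `fits_in_dg` are hypothetical.

```sh
#!/bin/sh
# Sketch: pull the MB figure out of "Maximum volume size: N (MMb)" on stdin.
maxsize_mb() {
    sed -n 's/.*(\([0-9][0-9]*\)Mb).*/\1/p'
}

# Succeed if a volume of $1 GB fits into $2 MB of free space.
fits_in_dg() {
    local want_gb="$1" max_mb="$2"
    [ $((want_gb * 1024)) -le "$max_mb" ]
}
```

Usage: `fits_in_dg 1024 "$(vxassist -g testdg maxsize | maxsize_mb)" && echo "1TB fits"`.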

Let's create a 1TB volume named 'testvol' in 'testdg' using the vxassist command, as shown below. To specify the size in MB, use 'm' instead of 'g'.

Syntax:

vxassist -g [DG_Name] make [Volume_Name] [Size(m|g)]

vxassist -g testdg make testvol 1024g

Volume details can be checked using the 'vxlist' command:

vxlist volume

Step-7: Creating VxFS Filesystem

To create a Veritas filesystem (VxFS), run:

mkfs -t vxfs /dev/vx/rdsk/testdg/testvol

Note: Create the filesystem on the raw device (rdsk), not on the block device (dsk).
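To avoid mixing up the two device trees, the paths can be derived from the DG and volume names. This is a trivial sketch following the /dev/vx/rdsk and /dev/vx/dsk layout used in this article. The helper names `vx_raw_dev` and `vx_block_dev` are hypothetical.

```sh
#!/bin/sh
# Sketch: build VxVM device paths for a volume ($1 = disk group, $2 = volume).
# The raw (rdsk) path is the one given to mkfs; the block (dsk) path is what
# ends up mounted.
vx_raw_dev()   { echo "/dev/vx/rdsk/$1/$2"; }
vx_block_dev() { echo "/dev/vx/dsk/$1/$2"; }
```

Usage: `mkfs -t vxfs "$(vx_raw_dev testdg testvol)"`.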

Step-8: Mounting VxFS Filesystem

Create a mount point directory on both nodes, then mount the filesystem. Mounting uses the block device (dsk), as shown below:

mkdir /shared_data (on both nodes)

The cfsmntadm command is the cluster mount administrative interface for VERITAS Cluster File Systems. Using cfsmntadm, you can add, delete, display, modify, and set policy on cluster-mounted file systems. The following command adds a CFS file system to the VCS main.cf file.

Syntax:

cfsmntadm add [Shared_DG_Name] [Shared_Volume] [Mount_Point_Name] [Service_Group_Name] node_name=[mount_options]

cfsmntadm add testdg testvol /shared_data vcsdata_sg 2gvcsnode01=cluster 2gvcsnode02=cluster

Mount Point is being added...
/shared_data added to the cluster-configuration

The cfsmount command mounts a shared volume associated with a mount point on the specified nodes. If no node names are specified, the shared volume is mounted on all associated nodes in the cluster. Before running cfsmount, the cluster mount instance for the shared volume must be defined with the cfsmntadm add command, as shown above.

Syntax:

cfsmount [Mount_Point_Name] [Node_Name…N]

cfsmount /shared_data 2gvcsnode01 2gvcsnode02

Mounting...
[/dev/vx/dsk/testdg/testvol] mounted successfully at /shared_data on 2gvcsnode01
[/dev/vx/dsk/testdg/testvol] mounted successfully at /shared_data on 2gvcsnode02
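The cfsmntadm add and cfsmount steps can be chained in one helper. This is a minimal sketch that assumes the same "cluster" mount option for every node, as in the example above, and node names without spaces. `add_and_mount_cfs` is hypothetical. The `CFSMNTADM` and `CFSMOUNT` overrides are also hypothetical and exist only to allow a dry run with `echo`.

```sh
#!/bin/sh
# Sketch: register a CFS mount (cfsmntadm add) and then mount it (cfsmount)
# on the same nodes. Args: DG VOLUME MOUNT_POINT SERVICE_GROUP NODE...
add_and_mount_cfs() {
    local dg vol mnt sg nodes count i
    dg="$1"; vol="$2"; mnt="$3"; sg="$4"
    shift 4
    nodes="$*"
    count=$#
    i=0
    # Rewrite each node name into node=cluster, in place
    while [ "$i" -lt "$count" ]; do
        set -- "$@" "$1=cluster"
        shift
        i=$((i + 1))
    done
    "${CFSMNTADM:-cfsmntadm}" add "$dg" "$vol" "$mnt" "$sg" "$@" || return 1
    # $nodes is deliberately unquoted: one argument per node name
    "${CFSMOUNT:-cfsmount}" "$mnt" $nodes
}
```

Usage: `add_and_mount_cfs testdg testvol /shared_data vcsdata_sg 2gvcsnode01 2gvcsnode02` issues the same two commands shown above.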

We have successfully mounted the VxFS filesystem, and you can start using it as needed.

Step-9: Verify that the filesystem is mounted using the df command.

df -h | grep -i testdg

/dev/vx/dsk/testdg/testvol  1.0T  480M  993G  2%  /shared_data
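For a scripted check on each node, the source device of the mount point can be compared with the expected VxVM block device. This sketch assumes the mount point is the last df column, which holds for `df -h` and `df -P` output. The function name `mount_source` is hypothetical.

```sh
#!/bin/sh
# Sketch: from df output on stdin, print the source device of the given
# mount point (assumes the mount point is the last column).
mount_source() {
    awk -v mnt="$1" '$NF == mnt { print $1 }'
}
```

Usage: `[ "$(df -P /shared_data | mount_source /shared_data)" = "/dev/vx/dsk/testdg/testvol" ] && echo mounted`.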

Step-10: Finally, check that the cluster service group is online using the hastatus command.

hastatus -sum

B  vcsdata_sg  2gvcsnode01  Y  N  ONLINE
B  vcsdata_sg  2gvcsnode02  Y  N  ONLINE
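This last check can also be automated. The sketch succeeds only if every "B" (group) line for the service group reports ONLINE in its last column, assuming the line layout shown above. It fails if the group is missing entirely. The function name `sg_online_everywhere` is hypothetical.

```sh
#!/bin/sh
# Sketch: read `hastatus -sum` output on stdin; succeed only if the service
# group has at least one "B" line and every such line ends in ONLINE.
sg_online_everywhere() {
    awk -v sg="$1" '$1 == "B" && $2 == sg {
        seen++
        if ($NF != "ONLINE") bad++
    }
    END { exit (seen == 0 || bad > 0) }'
}
```

Usage: `hastatus -sum | sg_online_everywhere vcsdata_sg && echo "vcsdata_sg is ONLINE on all nodes"`.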

Conclusion

In this tutorial, we've shown you how to create a shared Disk Group (DG), Volume and VxFS filesystem using Veritas Volume Manager (VxVM) in a two-node Veritas cluster on Linux in a few easy steps.

We also showed you how to mount the filesystem on all nodes using the cfsmntadm and cfsmount commands.

If you have any questions or feedback, feel free to comment below.

Kindly support us by sharing this article with a wider audience.